Quizipedia. A web quiz game using Wikipedia content

Posted by jimblackler on Nov 11, 2009

Wikipedia is a fantastic resource. As well as a great encyclopedia, it is a gold mine of information placed in an organized structure. I’ve long been interested in how this could be exploited for applications beyond the encyclopedia, something which is allowed for in the site’s Free Documentation license.

It seemed to me that a general knowledge quiz would be a superb alternative use for the data, so I set about to make a Wikipedia quiz, or ‘Quizipedia’. Mining Wikipedia would result in an array of questions from geography, history, entertainment, sports and science; in fact across all areas of human endeavor and study across the globe.

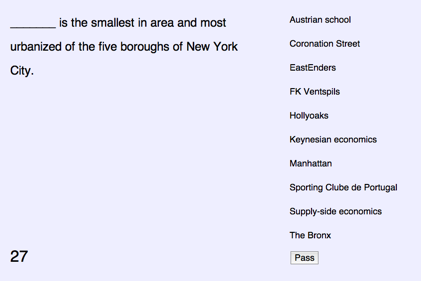

I decided the game would work best in a multiple choice format. The design I chose was to challenge the player to match ten random article names (on the left) against the subject description from the article (on the right). The first sentence of a Wikipedia article usually succinctly describes the subject of the article, making it ideal for a quiz question. The player has sixty seconds to complete the task. The player is permitted to ‘pass’ a question, in which case the next question in rotation is shown.

The pass mechanism is in part because the scraper is not perfect. I’d like to think it’s about 90% perfect, but weird or unfair questions can slip through. Allowing the player to pass these questions until they can be answered by elimination means the quiz is not ruined.
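The pass rotation can be sketched in a few lines. This is only an illustration in Python, not the code of the GWT client; the class name QuestionRotation and its method names are hypothetical.

```python
from collections import deque


class QuestionRotation:
    """Holds the unanswered questions of one round in rotation order."""

    def __init__(self, questions):
        self._queue = deque(questions)

    def current(self):
        # The question currently shown, or None when the round is complete.
        return self._queue[0] if self._queue else None

    def pass_question(self):
        # Move the current question to the back so the next one is shown.
        if self._queue:
            self._queue.rotate(-1)

    def answer(self):
        # Remove the current question once it has been matched.
        if self._queue:
            self._queue.popleft()
```

A passed question comes round again after the others, by which point elimination may have made it answerable.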

The game is here and it is ready to play. However if you’d like to read about how I built it, read on.

There are two parts to the web game. The first is the client, a GWT application served on Google App Engine. The second is the database of questions the client works from. Selecting the best questions and effectively scraping them from Wikipedia was the tough part.

Scraper

Using the techniques I worked out for my earlier article, I wrote a new scraper to crawl pages from Wikipedia. This was a Java client running on my workstation, making HTTP requests and using an SGML processor and XPath queries to pull out the relevant text. The rate of crawling is no more than 30 articles a minute, which is unlikely to be interpreted as an attack by the web server.
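Capping the crawl at 30 articles a minute amounts to leaving at least two seconds between requests. Here is a minimal sketch of such a limiter, in Python for brevity rather than the Java of the original scraper. The Throttle class and its parameters are hypothetical, and the clock and sleep functions are injectable only so the behaviour can be checked without real waiting.

```python
import time


class Throttle:
    """Spaces out calls so that no more than `per_minute` happen per minute."""

    def __init__(self, per_minute=30, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = 60.0 / per_minute  # 2.0 seconds at 30 per minute
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous call, if any

    def wait(self):
        # Block until at least min_interval has passed since the last call.
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

A crawler would call wait() immediately before each HTTP request.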

Each page crawl extracts links from the article to be used by further searches. It also extracts the opening sentence. All we have to do is strip the name of the article, leaving the reader to guess what is being referred to.

For instance after extraction and stripping bracketed text, the first sentence of the article about the city of Paris reads:

Paris is the capital of France and the country's most populous city.

Simply finding the subject of the article in the opening text and ‘blanking’ it with underscores can create a feasible question to describe the subject. In this case:

______ is the capital of France and the country's most populous city.

The blanking process cannot always be completed. This is because the subject name does not always appear in full in the opening sentence. This means only about 60% of articles can be processed in this way.

In order to maximize the chances of being able to successfully remove the article name from the opening sentence, I consider all text strings used to link to the article found in the crawl so far. For instance, an article may be linked with the term “United States” or “United States of America”.
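The bracket stripping and blanking steps could look roughly like this. It is a sketch in Python under my own assumptions, not the original Java: the function names are hypothetical, only simple non-nested parentheses are stripped, and the choice to try the longest link text first is mine.

```python
import re


def strip_bracketed(text):
    """Remove simple (non-nested) parenthesised asides and tidy the spacing."""
    text = re.sub(r"\s*\([^()]*\)", "", text)
    return re.sub(r"\s{2,}", " ", text).strip()


def blank_subject(sentence, names, blank="______"):
    """Blank out the article's subject in its opening sentence.

    `names` holds the article title plus any link texts seen for it.
    The longest name found in the sentence is replaced wherever it occurs.
    Returns None when none of the names appears, meaning the article
    cannot be turned into a question this way.
    """
    for name in sorted(names, key=len, reverse=True):
        pattern = re.compile(
            r"(?<!\w)" + re.escape(name) + r"(?!\w)", re.IGNORECASE
        )
        if pattern.search(sentence):
            return pattern.sub(blank, sentence)
    return None


question = blank_subject(
    "Paris is the capital of France and the country's most populous city.",
    ["Paris"],
)
print(question)
```

Trying longer names first means “United States of America” is blanked as a whole, rather than leaving “of America” behind as a giveaway.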

Obscurity

One problem I encountered early on was that there is a surprising number of articles on very obscure topics to be found on Wikipedia. Follow the ‘random article’ link and you’ll get a good idea of this. I was happy for the quiz to be about random topics from general knowledge. However, to give players a fighting chance, the questions should be on topics that they have a reasonable chance of having heard of.

A good signal of how well known a topic is turns out to be the number of incoming links to the article, and also the number of outgoing links. The latter is the case because articles on popular subjects tend to be well-developed by many editors, and this translates into a lot of links going out. Fortunately both these metrics are available to me during the crawl, allowing me to discard smaller or not well-linked articles.
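As an illustration, a filter on both link counts might look like the following. This is a Python sketch, not the original code. The function name and the thresholds are hypothetical (the post does not give the real cut-offs), and the crawl is assumed to be held as a mapping from each article title to the titles it links to.

```python
from collections import Counter


def well_linked(outgoing_links, min_in=2, min_out=2):
    """Return the titles that have enough incoming and outgoing links.

    `outgoing_links` maps each crawled title to the titles it links to.
    Repeated links from one article to the same target count once.
    """
    incoming = Counter(
        target
        for targets in outgoing_links.values()
        for target in set(targets)
    )
    return {
        title
        for title, targets in outgoing_links.items()
        if len(set(targets)) >= min_out and incoming[title] >= min_in
    }
```

Real thresholds would need tuning against the crawl; the tiny defaults here only suit a toy graph.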

Alternatives

The multiple choice aspect to the game demands feasible alternatives to each answer. Otherwise it can be obvious which is the correct answer by applying a process of elimination. For instance if the question pertains to a country with particular borders and there is only one country on the list of alternative answers, the correct answer is obvious. I wanted to include in each quiz a number of subjects that could reasonably be confused without close consideration of the question. So if the answer was ‘Paris’, the user may also be presented with ‘Lyon’ or ‘Brussels’.

This was the most challenging part of the scraping process. I investigated a number of ways to discover, for a given page, typical alternative answers that could be presented. These included sharing a large proportion of incoming or outgoing links. The problem with this is the computation required to match link fingerprints globally: just a few hours of scraping accumulates 5 million links between pages. Fortunately a really good signal turned out to be when the links appear together in the same lists or tables in Wikipedia. There are a surprising number of lists to be found in Wikipedia articles, and they very frequently group together articles of a similar type. Identifying where articles appear together in lists, and making it likely that they will be placed together in quizzes, makes the game tougher and hopefully more compelling.
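The list signal can be sketched as a co-occurrence count. This is a Python illustration under my own assumptions rather than the original implementation: the function names are hypothetical, each scraped list or table is assumed to be available as a plain list of article titles, and ranking by the number of shared lists is one possible scoring.

```python
from collections import Counter, defaultdict
from itertools import combinations


def cooccurrence(lists):
    """Count how often each pair of titles appears in the same list."""
    counts = defaultdict(Counter)
    for items in lists:
        for a, b in combinations(sorted(set(items)), 2):
            counts[a][b] += 1
            counts[b][a] += 1
    return counts


def alternatives(title, counts, k=3):
    """Return up to k titles that most often share a list with `title`."""
    ranked = sorted(
        counts.get(title, {}).items(),
        key=lambda pair: (-pair[1], pair[0]),  # most shared lists first
    )
    return [name for name, _ in ranked[:k]]
```

Because only titles that actually share a list are compared, this avoids matching link fingerprints across the whole crawl.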

That’s a brief description of how I built Quizipedia. I hope you enjoy the game, and if you have observations or feedback please leave it in the comments of this page.

[…] random-article function. Don’t judge me. I’m obviously not the only one. Web developer Jim Blackler took my dirty little secret one step further with Quizipedia, the quiz game built from fragments of […]

In Ludum Dare, a 48 hour game development competition, a few months ago, we had the theme of ‘wikipedia’ to make our games. This kind of trivia format is the first thing that popped into my mind, as well as others, and although I didn’t finish my game, other wiki trivia games were made.

The games are here: http://www.ludumdare.com/compo/minild-12/?action=preview

Good job!

Excellent implementation of a good idea! I especially like the way you implemented the “alternatives”, it makes it more challenging.

A couple things struck me while playing a few rounds:

– Sometimes the answer is made patently obvious in the question, such as when it gives a pronunciation guide for the word, or when it gives a reordering of a multiple word answer. The latter cases are probably too varied to easily detect, but you could probably detect pronunciation guides fairly easily and just get rid of them.

– Sometimes there is too much ambiguity, example:

“_______ is an American actor of stage and screen.”

I can’t think of a creative way to detect cases like these besides ignoring first sentences that are shorter than a certain amount of characters. But even that wouldn’t be very reliable.

Anyway, great work, it’s a fun time-waster!

Oh, I should add that I thought the multiple-choice format was a good choice, since it often helps players get around the ambiguity I mentioned.

Ehm, isn’t the subject description on the left and article names on the right?

Anyway, cool quiz! :)

My friend and I created a game based on this idea back in 2005. We called it Wikitrivia and it can be found at http://www.wikitrivia.net/ — we use an input form where the first letter is provided instead of categories though. Good luck with your game, guess the idea is in the air!

(categories = multiple choice answers, that is)

[…] is a fast-paced trivia game by one Jim Blackler, that uses Wikipedia articles as a base. Via Waxy, ya know. Posted by bschlog Filed in Neato […]

[…] part is how Blackler went about finding the articles to use, which he explains in a post about how he made it. For example, he used links within Wikipedia to determine whether an article would be too […]

The last two correct answers seem to always be in reverse alphabetical order, e.g. Republic then Baseball, Kosovo then Christianity, etc.

What could be done to improve the randomness of questions?

Nice idea! I also made a small flash-game, which uses the wikimedia api. It works great, but it would be nice if I could somehow make use of categories instead of just random concepts.

It’s WikiWords. The object is to click away the non-related concepts in time.

I am curious about the popularity of this game – do you get a lot of visitors?

Thanks

very interested in this….seems the link doesn’t work anymore.